Features

gRPC Bridge

Real-time bidirectional gRPC bridge between Python agents and the C# game engine. Non-blocking, ~25 ticks/sec.
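At roughly 25 ticks/sec the engine produces a new state about every 40 ms (1 / 25 s). A non-blocking link means that an agent which falls behind should get the newest state, not a growing backlog. The bridge's internals aren't shown here, so the sketch below only illustrates that general pattern in plain asyncio. `LatestValue`, `game_loop`, and `slow_agent` are hypothetical names, and this is not OpenRA-RL's implementation.

```python
import asyncio
from typing import Generic, Optional, TypeVar

T = TypeVar("T")

TICKS_PER_SEC = 25                   # approximate rate quoted above
TICK_INTERVAL = 1.0 / TICKS_PER_SEC  # 0.04 s, i.e. 40 ms per tick


class LatestValue(Generic[T]):
    """Hypothetical single-slot buffer: the producer never waits, unread values are overwritten."""

    def __init__(self) -> None:
        self._value: Optional[T] = None
        self._event = asyncio.Event()

    def publish(self, value: T) -> None:
        # Non-blocking: replace whatever the consumer has not read yet.
        self._value = value
        self._event.set()

    async def get(self) -> T:
        # Wait until at least one new value has arrived, then take the newest.
        await self._event.wait()
        self._event.clear()
        assert self._value is not None
        return self._value


async def game_loop(buf: "LatestValue[int]", ticks: int) -> None:
    # Stand-in for the engine: publishes a tick counter every TICK_INTERVAL.
    for tick in range(ticks):
        buf.publish(tick)
        await asyncio.sleep(TICK_INTERVAL)


async def slow_agent(buf: "LatestValue[int]", reads: int) -> list[int]:
    # Stand-in for an agent that spends about 3 ticks on each decision.
    seen = []
    for _ in range(reads):
        seen.append(await buf.get())
        await asyncio.sleep(3 * TICK_INTERVAL)
    return seen


async def main() -> list[int]:
    buf: LatestValue[int] = LatestValue()
    producer = asyncio.create_task(game_loop(buf, ticks=20))
    seen = await slow_agent(buf, reads=4)
    await producer
    return seen


if __name__ == "__main__":
    # Tick numbers are typically non-consecutive: stale states are skipped.
    print(asyncio.run(main()))
```

The game loop never waits on the agent. The cost is that intermediate states are dropped, which is usually acceptable for real-time control.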

Gymnasium API

Standard reset/step interface via OpenEnv. Drop-in compatible with RL training frameworks such as TRL and Stable Baselines3.
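Frameworks such as Stable Baselines3 call `reset()`/`step()` synchronously, while the OpenRA-RL client is async. One way to bridge the two is a thin adapter that owns a private event loop and runs each async call to completion. The sketch below assumes this approach. `SyncEnvAdapter`, `FakeAsyncEnv`, and the `Obs` fields are hypothetical. The real client's return types, and any sync wrapper it may already provide, could differ. The adapter follows Gymnasium's return conventions but is not a full `gymnasium.Env`, because it defines no `observation_space` or `action_space`.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class Obs:
    # Hypothetical observation: only the fields this sketch needs.
    step: int
    reward: float
    done: bool


class FakeAsyncEnv:
    """Toy stand-in for an async client with awaitable reset()/step()."""

    def __init__(self, horizon: int = 3) -> None:
        self.horizon = horizon
        self.t = 0

    async def reset(self) -> Obs:
        self.t = 0
        return Obs(step=0, reward=0.0, done=False)

    async def step(self, action: int) -> Obs:
        self.t += 1
        return Obs(step=self.t, reward=float(action), done=self.t >= self.horizon)


class SyncEnvAdapter:
    """Synchronous, Gymnasium-style facade over an async env (hypothetical).

    reset() returns (obs, info); step() returns the 5-tuple
    (obs, reward, terminated, truncated, info).
    """

    def __init__(self, async_env) -> None:
        self._env = async_env
        self._loop = asyncio.new_event_loop()  # private loop, not the caller's

    def reset(self):
        obs = self._loop.run_until_complete(self._env.reset())
        return obs, {}

    def step(self, action):
        obs = self._loop.run_until_complete(self._env.step(action))
        # This sketch treats every episode end as termination (no time limit).
        return obs, obs.reward, obs.done, False, {}

    def close(self) -> None:
        self._loop.close()


env = SyncEnvAdapter(FakeAsyncEnv(horizon=3))
obs, info = env.reset()
terminated = False
total = 0.0
while not terminated:
    obs, reward, terminated, truncated, info = env.step(1)
    total += reward
env.close()
print(total)  # 3.0
```

`run_until_complete` raises a `RuntimeError` if another event loop is already running in the same thread, as in a Jupyter notebook. In that setting, await the async client directly instead.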

Rich Observations

9-channel spatial tensor, per-unit stats (facing, stance, range, veterancy), economy, military scores, and fog of war.
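Before a policy network can use per-unit stats, they usually have to be flattened into fixed-length numeric vectors. The sketch below assumes a hypothetical `UnitStats` record with facing in degrees, a stance string, weapon range in map cells, and an integer veterancy level. The stance names mirror OpenRA's unit stances. The normalization caps `MAX_VETERANCY` and `MAX_RANGE_CELLS` are illustrative guesses. OpenRA-RL's actual field names, units, and encodings may differ.

```python
import math
from dataclasses import dataclass

# Assumed stance vocabulary (mirrors OpenRA's unit stance names; the
# observation may encode stances differently).
STANCES = ("HoldFire", "ReturnFire", "Defend", "AttackAnything")
MAX_VETERANCY = 4        # hypothetical cap used for normalization
MAX_RANGE_CELLS = 16.0   # hypothetical cap used for normalization


@dataclass
class UnitStats:
    facing_deg: float   # hypothetical: heading in degrees, 0-360
    stance: str         # one of STANCES
    range_cells: float  # hypothetical: weapon range in map cells
    veterancy: int      # hypothetical: 0..MAX_VETERANCY


def encode_unit(u: UnitStats) -> list[float]:
    """Flatten one unit into 2 + 4 + 1 + 1 = 8 floats."""
    theta = math.radians(u.facing_deg)
    # sin/cos avoids the discontinuity between 359 and 0 degrees.
    facing = [math.sin(theta), math.cos(theta)]
    # One-hot stance; STANCES.index raises ValueError for an unknown name.
    stance = [0.0] * len(STANCES)
    stance[STANCES.index(u.stance)] = 1.0
    # Scale the scalar stats to roughly [0, 1].
    rng = [min(u.range_cells, MAX_RANGE_CELLS) / MAX_RANGE_CELLS]
    vet = [u.veterancy / MAX_VETERANCY]
    return facing + stance + rng + vet


vec = encode_unit(UnitStats(facing_deg=90.0, stance="Defend", range_cells=8.0, veterancy=2))
print(len(vec))  # 8
```

Encoding facing as a sin/cos pair keeps units with headings of 1° and 359° close together in feature space. A raw angle would place them at opposite ends of the range.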

21 Action Types

Move, attack, build, train, deploy, guard, set stance, transport loading, power management, and more.
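With many action kinds, it can help to give each order a small typed representation and validate it before it reaches the environment. The sketch below is hypothetical throughout. `ActionType` lists only the action kinds named above (not all 21), and the `Command` fields, the `REQUIRED` table, and the `to_payload` dict layout are assumptions rather than OpenRA-RL's actual action format.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class ActionType(Enum):
    # Hypothetical names for the action kinds listed above; the real set has 21.
    MOVE = "move"
    ATTACK = "attack"
    BUILD = "build"
    TRAIN = "train"
    DEPLOY = "deploy"
    GUARD = "guard"
    SET_STANCE = "set_stance"
    LOAD_TRANSPORT = "load_transport"
    POWER_TOGGLE = "power_toggle"


# Which optional fields each kind requires in this sketch (assumption).
REQUIRED = {
    ActionType.MOVE: {"target_cell"},
    ActionType.ATTACK: {"target_id"},
    ActionType.BUILD: {"item", "target_cell"},
    ActionType.TRAIN: {"item"},
    ActionType.DEPLOY: set(),
    ActionType.GUARD: {"target_id"},
    ActionType.SET_STANCE: {"stance"},
    ActionType.LOAD_TRANSPORT: {"target_id"},
    ActionType.POWER_TOGGLE: set(),
}


@dataclass
class Command:
    kind: ActionType
    unit_ids: list[int] = field(default_factory=list)
    target_cell: Optional[tuple[int, int]] = None
    target_id: Optional[int] = None
    item: Optional[str] = None
    stance: Optional[str] = None

    def validate(self) -> None:
        # Reject commands whose required fields for this kind are unset.
        missing = [name for name in REQUIRED[self.kind] if getattr(self, name) is None]
        if missing:
            raise ValueError(f"{self.kind.value} is missing {sorted(missing)}")

    def to_payload(self) -> dict:
        """Validate, then serialize to a plain dict without unset fields (hypothetical layout)."""
        self.validate()
        payload = {"type": self.kind.value, "unit_ids": self.unit_ids}
        for name in ("target_cell", "target_id", "item", "stance"):
            value = getattr(self, name)
            if value is not None:
                payload[name] = value
        return payload


print(Command(ActionType.MOVE, unit_ids=[7], target_cell=(10, 12)).to_payload())
# {'type': 'move', 'unit_ids': [7], 'target_cell': (10, 12)}
```

Validating on the agent side catches malformed orders, such as an attack with no target, before the environment has to reject them.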

Multi-Agent Support

Scripted bots, LLM agents (Claude/GPT), and RL policies. Pre-game planning phase with opponent intelligence.
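The quick-start loop in this README only needs an object with a `decide(obs)` method. Scripted bots, LLM agents, and RL policies can therefore share one loop if they agree on that interface. The sketch below uses `typing.Protocol`. `Agent`, `ScriptedBot`, `RandomPolicy`, `run_turn`, and the dict observation with an `army_size` key are hypothetical stand-ins for the real observation and action types.

```python
import random
from typing import Any, Protocol


class Agent(Protocol):
    """Anything with decide(obs) -> action can drive the game loop."""

    def decide(self, obs: Any) -> Any: ...


class ScriptedBot:
    """Rule-based agent: attack once the army is big enough, otherwise train."""

    def __init__(self, threshold: int = 5) -> None:
        self.threshold = threshold

    def decide(self, obs: dict) -> str:
        return "attack" if obs["army_size"] >= self.threshold else "train"


class RandomPolicy:
    """Placeholder for a learned policy: samples uniformly from its action set."""

    def __init__(self, actions: list[str], seed: int = 0) -> None:
        self.actions = actions
        self.rng = random.Random(seed)  # seeded for reproducibility

    def decide(self, obs: dict) -> str:
        return self.rng.choice(self.actions)


def run_turn(agent: Agent, obs: dict) -> str:
    # The caller depends only on the Protocol, not on a concrete agent class.
    return agent.decide(obs)


agents: list[Agent] = [ScriptedBot(threshold=5), RandomPolicy(["train", "attack", "guard"])]
for a in agents:
    print(type(a).__name__, run_turn(a, {"army_size": 6}))
```

An LLM-backed agent would be one more class whose `decide` builds a prompt from `obs` and parses the model's reply into an action. Nothing else in the loop has to change.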

Docker & Headless

Null platform for headless training at 3% CPU. Docker Compose for one-command deployment.

Simple, Familiar API

OpenRA-RL follows the standard Gymnasium reset/step pattern through the OpenEnv framework. Connect to a running OpenRA game, observe the world, and issue commands, all through async Python.

Compatible with TRL, Stable Baselines3, and any framework that speaks Gymnasium.

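```python
import asyncio

from openra_env.client import OpenRAEnv


async def main(agent):
    # Connect to a running OpenRA game
    async with OpenRAEnv("http://localhost:8000") as env:
        obs = await env.reset()  # Start a new game
        while not obs.done:
            action = agent.decide(obs)  # Your agent logic
            obs = await env.step(action)  # Execute and observe


asyncio.run(main(agent))  # `agent`: any object with a decide(obs) method
```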

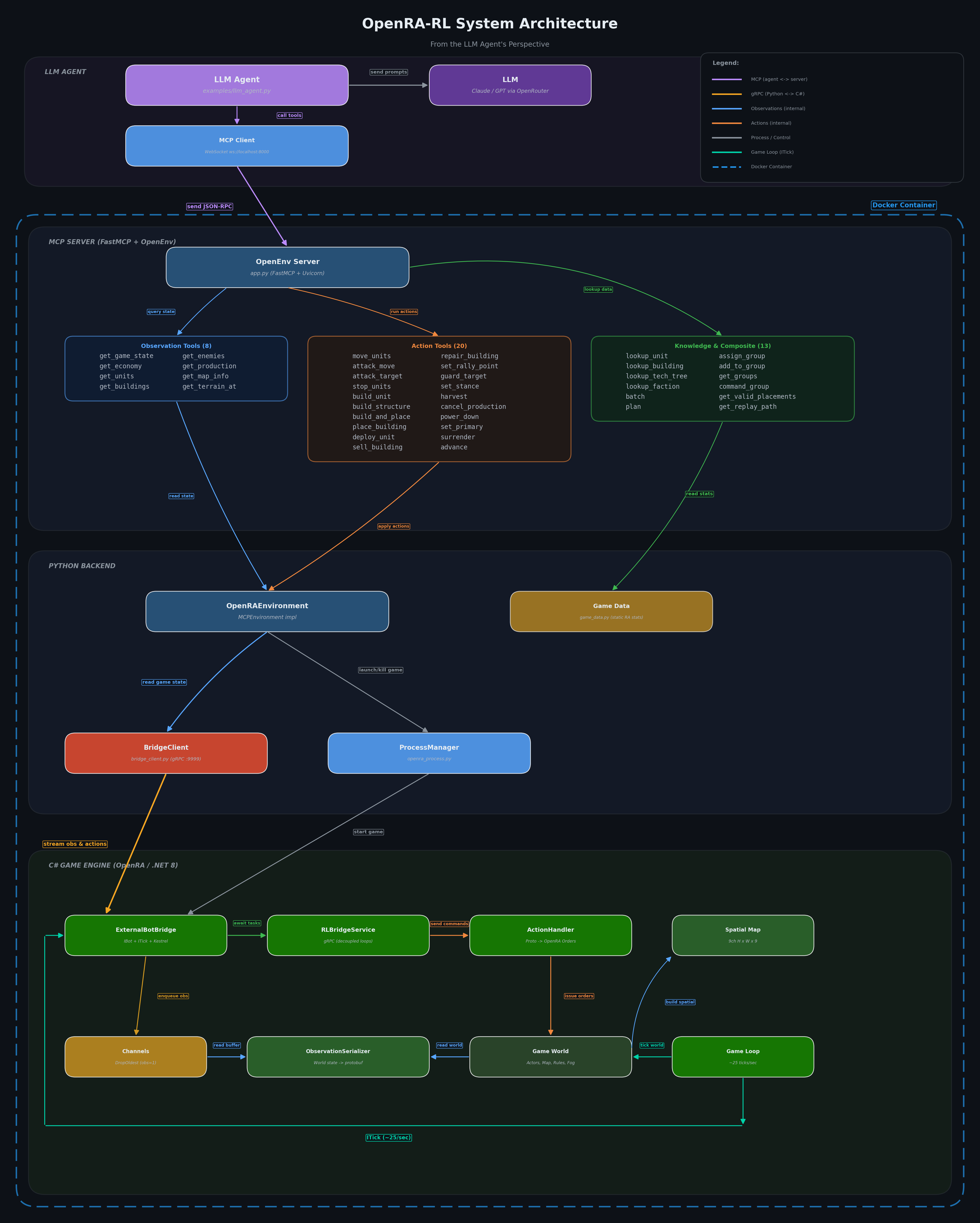

Architecture

Three components work together: a Python environment wrapper, a gRPC bridge running inside the OpenRA game engine (via ASP.NET Core Kestrel), and the OpenEnv framework for standardized agent interaction.